I am currently a fifth-year Ph.D. student at the College of Computer Science and Technology, Zhejiang University, supervised by Prof. Chao Wu. I am also co-supervised by Kun Kuang and Fei Wu from Zhejiang University.

My research primarily focuses on the alignment and reasoning enhancement of multimodal large language models and vision-language models, with a prior concentration on unsupervised domain adaptation and domain generalization. Recently, I have also been particularly interested in multi-agent systems for reasoning in MLLMs, exploring how collaborative interactions among agents can enhance reasoning capabilities in complex tasks.

I am on the job market and will graduate in summer 2025. I am open to both academic and industrial positions! Please contact me if you have a suitable position.

🔥 News

- 2025.05: 🎉 Four co-authored papers have been accepted to ICML 2025.

- 2025.04: 🥳 I will attend the ICLR conference in Singapore. Feel free to reach out if you would like to talk in person.

- 2025.02: 🎉 One co-author paper has been accepted to ICLR 2025 Workshop.

- 2025.01: 🎉 One first-author paper and two co-author papers have been accepted to ICLR 2025.

- 2024.07: 🥳 I went to Vienna, Austria to attend the ICML conference.

- 2024.05: 🎉 One first-author paper has been accepted to KDD 2024.

- 2024.05: 🎉 One first-author paper has been accepted to ICML 2024.

- 2023.10: 🥳 I went to Paris, France to attend the ICCV conference.

- 2023.07: 🎉 One first-author paper has been accepted to ICCV 2023.

- 2023.07: 🎉 One first-author paper has been accepted to ACM Multimedia 2023.

- 2023.05: 🎉 One paper has been accepted to KDD 2023.

- 2022.11: 🎉 One paper has been accepted to IEEE Transactions on Big Data.

- 2021.05: 🎉 One paper has been accepted to IJCAI 2021 FL Workshop.

📝 Publications

REMEDY: Recipe Merging Dynamics in Large Vision-Language Models.

Didi Zhu, Yibing Song, Tao Shen, Ziyu Zhao, Jinluan Yang, Min Zhang, Chao Wu

- First exploration of the LoRA fusion problem in Multimodal Large Language Models

- Proposed a dynamic fusion scheme that enhances the zero-shot generalization capability of MLLMs.

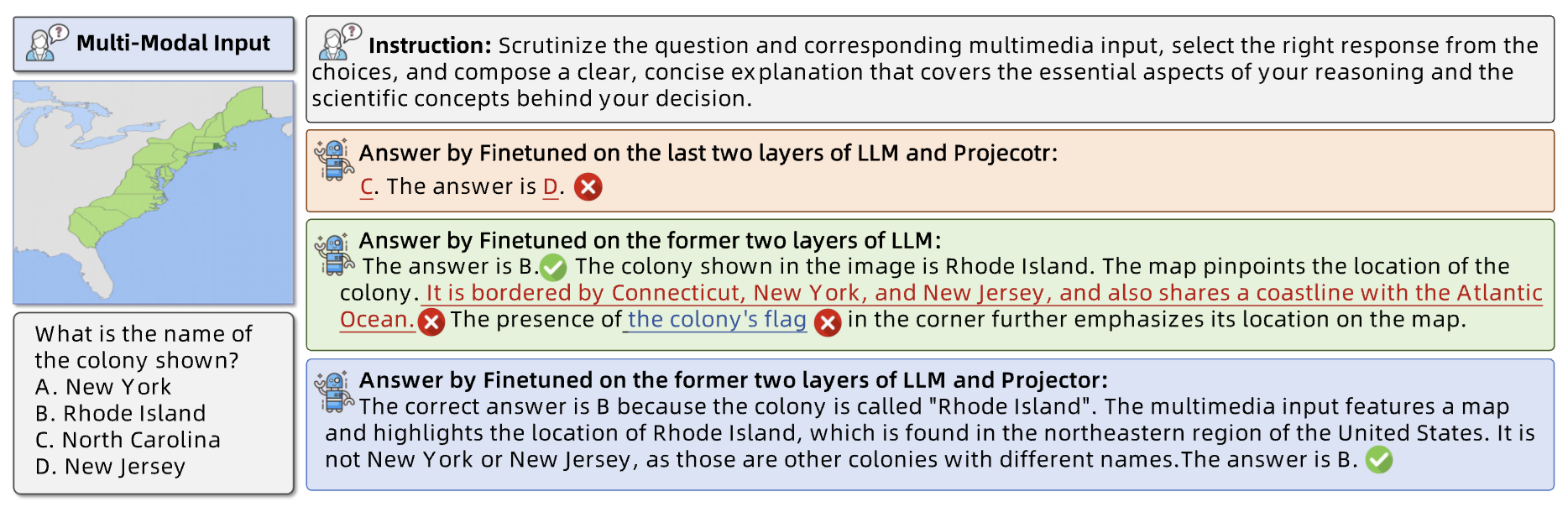

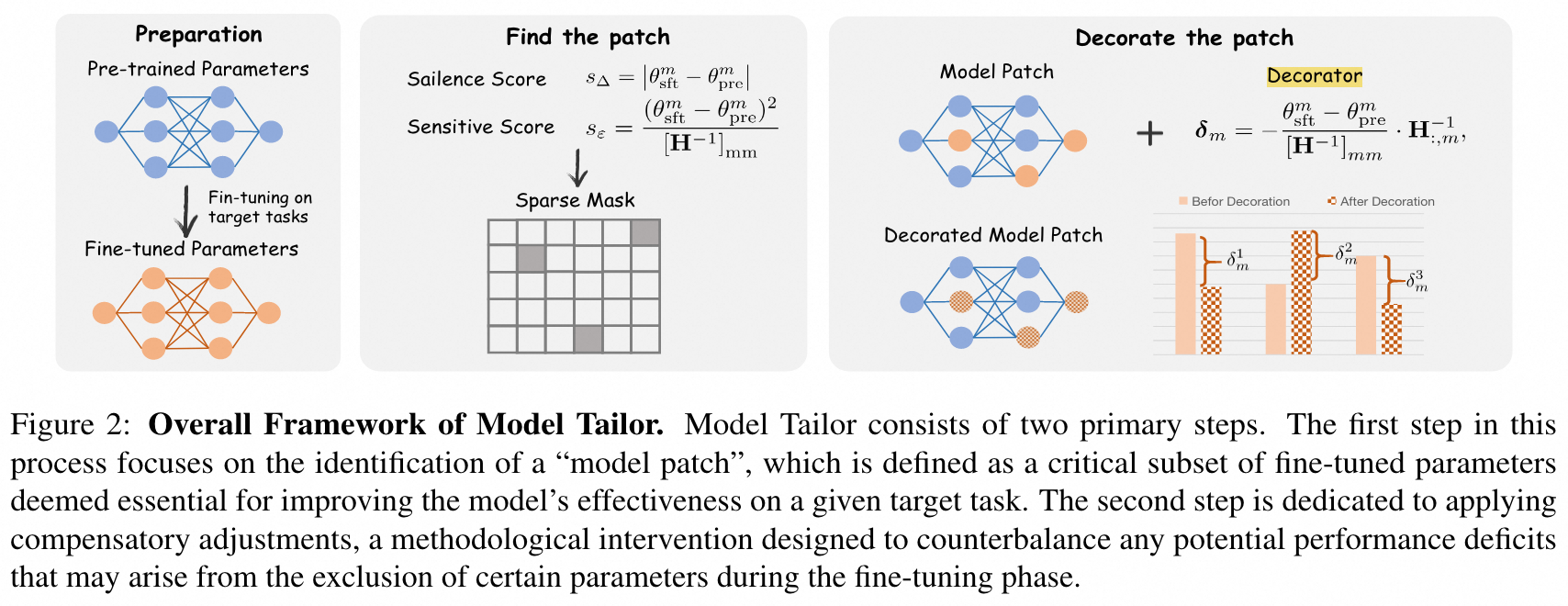

Model Tailor: Mitigating Catastrophic Forgetting in Multi-modal Large Language Models.

Didi Zhu, Zhongyisun Sun, Zexi Li, Tao Shen, Ke Yan, Shouhong Ding, Chao Wu, Kun Kuang

- Presented the first comprehensive study of catastrophic forgetting in MLLMs such as InstructBLIP and LLaVA.

- Addressed the issue through an innovative training-free model grafting technique.

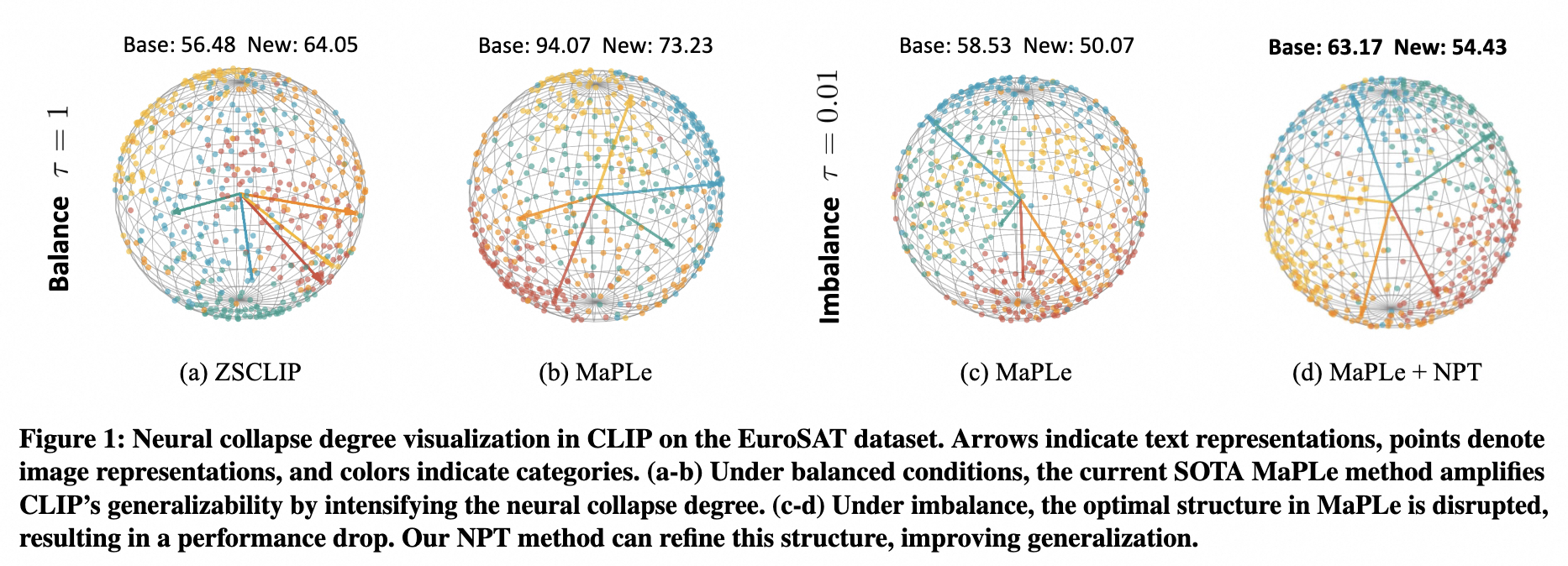

Neural Collapse Anchored Prompt Tuning for Generalizable Vision-Language Models.

Didi Zhu, Zexi Li, Min Zhang, Junkun Yuan, Jiashuo Liu, Kun Kuang, Chao Wu

- The first exploration of large vision-language models through the lens of neural collapse in deep learning theory.

- Tackled class imbalance in generalization tasks for large vision-language models by leveraging neural collapse theory.

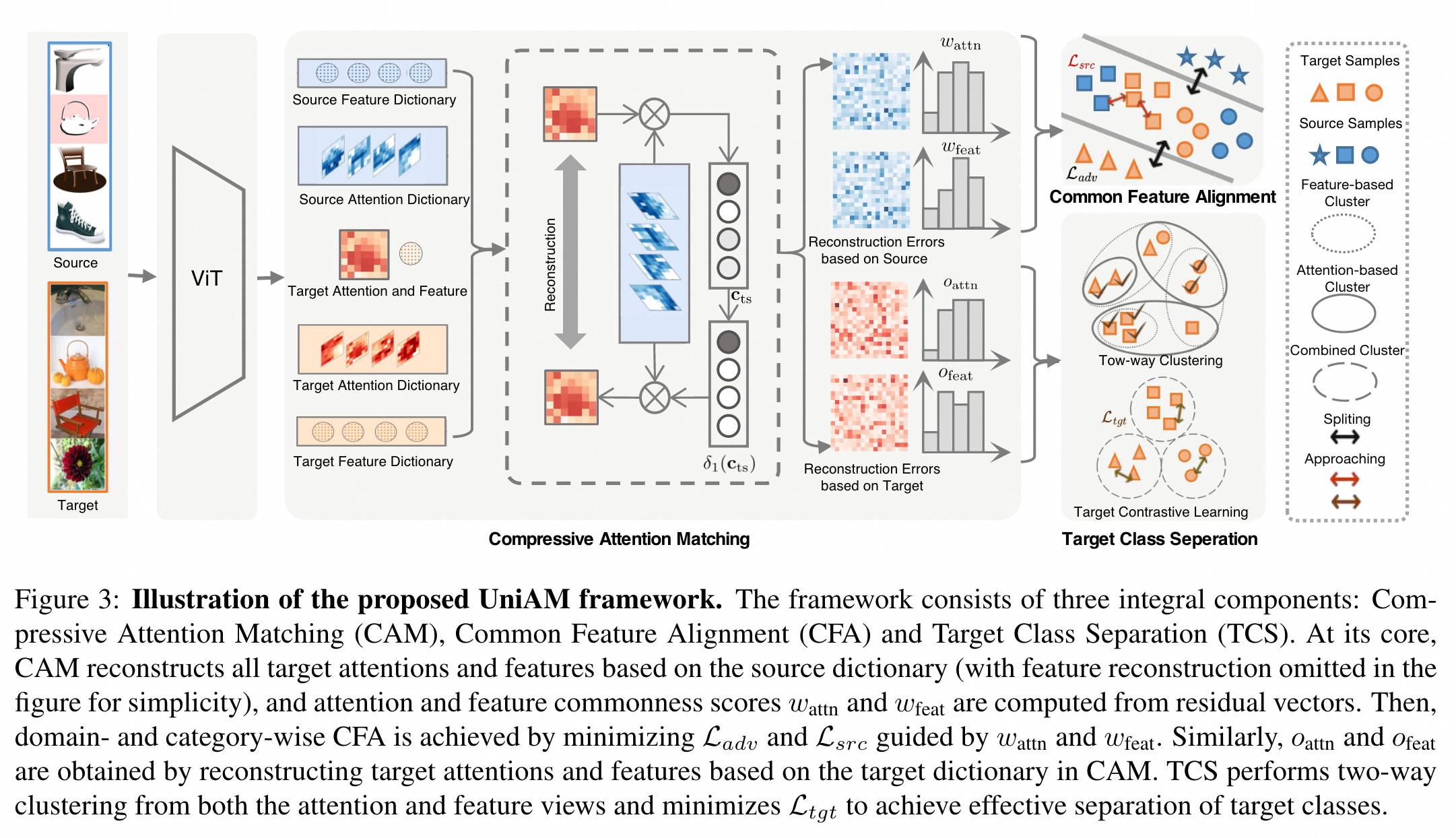

Universal domain adaptation via compressive attention matching.

Didi Zhu, Yinchuan Li, Junkun Yuan, Zexi Li, Kun Kuang, Chao Wu

- Addressed the issue of inconsistent source-target label spaces in Universal Domain Adaptation directly using self-attention in ViT.

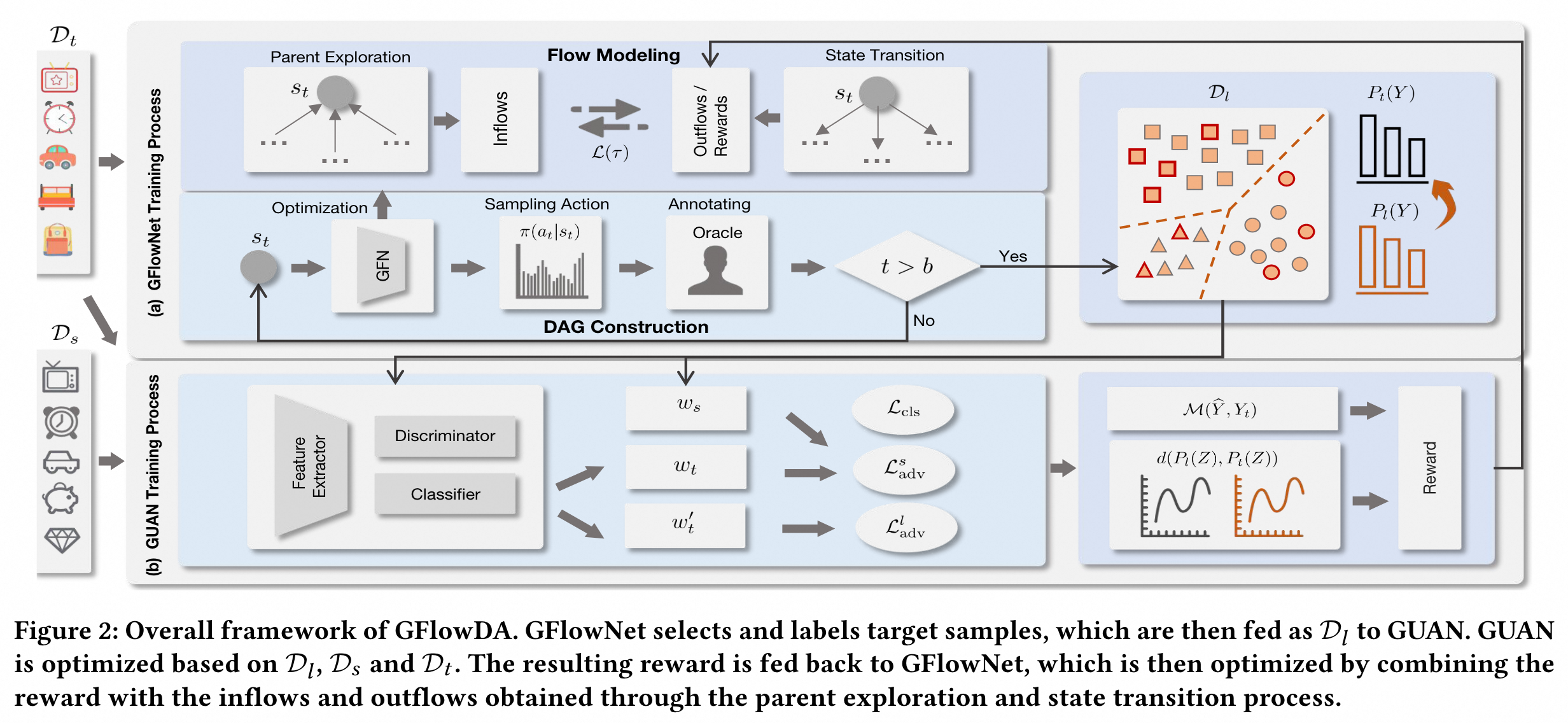

Generalized Universal Domain Adaptation with Generative Flow Networks.

Didi Zhu, Yinchuan Li, Yunfeng Shao, Jianye Hao, Fei Wu, Kun Kuang, Jun Xiao, Chao Wu

- Introduced a comprehensive problem setting, Generalized Universal Domain Adaptation (GUDA), which unifies all Domain Adaptation sub-problems involving label heterogeneity.

- Implemented an exploration-aware active learning strategy based on Generative Flow Networks to effectively address GUDA.

- ICML 2025. ZeroFlow: Overcoming Catastrophic Forgetting is Easier than You Think. Tao Feng, Wei Li, DiDi Zhu, Hangjie Yuan, Wendi Zheng, Dan Zhang, Jie Tang.

- ICML 2025. Learn from Downstream and Be Yourself in Multimodal Large Language Model Fine-Tuning. Wenke Huang, Jian Liang, Zekun Shi, Didi Zhu, Guancheng Wan, He Li, Bo Du, Dacheng Tao, Mang Ye.

- ICML 2025. Be Confident: Uncovering Overfitting in MLLM Multi-Task Tuning. Wenke Huang, Jian Liang, Guancheng Wan, Didi Zhu, He Li, Jiawei Shao, Mang Ye, Bo Du, Dacheng Tao.

- ICML 2025. ERICT: Enhancing Robustness by Identifying Concept Tokens in Zero-Shot Vision Language Models. Xinpeng Dong, Min Zhang, Didi Zhu, Ye Jun Jian, Zhang Keli, Aimin Zhou, Fei Wu, Kun Kuang.

- ICLR 2025. Mitigating the Backdoor Effect for Multi-Task Model Merging via Safety-Aware Subspace. Jinluan Yang, Anke Tang, Didi Zhu, Zhengyu Chen, Li Shen, Fei Wu.

- ICLR 2025. Merging loras like playing lego: Pushing the modularity of lora to extremes through rank-wise clustering. Ziyu Zhao, Tao Shen, Didi Zhu, Zexi Li, Jing Su, Xuwu Wang, Kun Kuang, Fei Wu.

- NeurIPS 2024 Workshop. Improving Group Connectivity for Generalization of Federated Deep Learning. Zexi Li, Jie Lin, Zhiqi Li, Didi Zhu, Rui Ye, Tao Shen, Tao Lin, Chao Wu.

- KDD 2023. Quantitatively Measuring and Contrastively Exploring Heterogeneity for Domain Generalization. Yunze Tong, Junkun Yuan, Min Zhang, Didi Zhu, Keli Zhang, Fei Wu, Kun Kuang.

- IEEE Transactions on Big Data. Towards Effective Clustered Federated Learning: A Peer-to-peer Framework with Adaptive Neighbor Matching. Zexi Li, Jiaxun Lu, Shuang Luo, Didi Zhu, Yunfeng Shao, Yinchuan Li, Zhimeng Zhang, Yongheng Wang, Chao Wu.

- IJCAI 2022 Workshop. Ensemble federated adversarial training with non-iid data. Shuang Luo, Didi Zhu, Zexi Li, Chao Wu.

🎖 Honors and Awards

- 2024.12 National Scholarship (Top 5%)

- 2020.10 Beijing Outstanding Graduates (Top 1%)

- 2019.10 National Scholarship (Top 1%)

- 2018.10 National Scholarship (Top 1%)

- 2017.10 National Scholarship (Top 1%)

Education

- 2020.09 - present, Ph.D. student, Computer Science and Technology, Zhejiang University, Hangzhou.

- 2016.09 - 2020.06, Undergraduate, Computer Science and Technology, Beijing University of Chemical Technology, Beijing.

Internships

- 2023.10 - 2024.02, Tencent Youtu Lab, Shanghai.

- 2022.05 - 2022.11, Huawei Noah’s Ark Lab, Beijing.

Services

Reviewer

- NeurIPS 2025, ICCV 2025, ICLR 2025, KDD 2025

- KDD 2024, TIP, Machine Learning Journal, MM 2024

- MM 2023